The Great Untapped GPU Reserve

We're witnessing an unprecedented arms race for AI data center capacity. Organizations are in fierce competition for specialized hardware, throwing billions into expansion while the semiconductor supply chain strains to meet demand.

How long that race lasts remains to be seen, but given how throttled even the paid LLM experiences are today, it feels like a marathon, not a sprint.

As an end user hitting usage limits, I desperately want to run the models on my own dedicated hardware in my home office. I'm about 64GB of VRAM short of doing this without extensive quantization for the more complex tasks. That is not the case for models in the 3-10B parameter range, where the bottleneck is more about compute than memory. There are lots of interesting smaller models, and lots of tasks like image generation that can run on discrete GPUs today. In the near future, we can imagine consumer desktop GPUs with expanded VRAM and models with fewer parameters churning out impressive results.

What if we could distribute the AI workload over a network of idle devices?

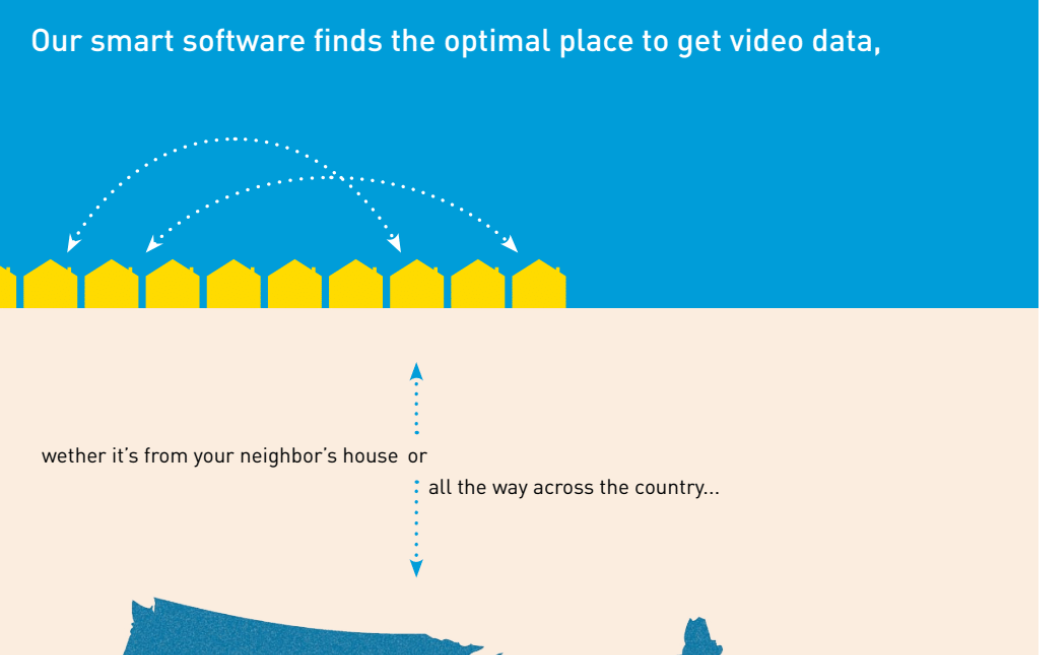

Having built a startup around distributed computing in the late 2000s, I can't help but see a striking parallel to a time when what we call "the cloud" today didn't really exist at scale. At the time, streaming HD video to millions of users around the globe was impossibly expensive, and the server infrastructure was being built long after the software was ready to take advantage of it. Back then we tried to solve it by caching large files to local disks and streaming them in chunks over a mesh network without going through a central data center. BitTorrent had proven this was an effective way to get HD video out to millions of people at no cost to the distributors. It turned out Hollywood contract law was the only thing slower than the data center buildout.

Even so, the idea was technologically compelling: a million customers, each with a terabyte of storage dedicated to video at home, coordinated by software, was one hell of a data center in 2010.

There are quite a few NVIDIA RTX 40xx and RTX 50xx cards sitting idle most of the time in people's homes and offices around the world. They're bought for gaming, CAD, amateur crypto mining, and all sorts of other applications, but how large is this pool compared with the entire AI infrastructure that Google and Amazon have?

Let's do some back-of-the-napkin math. According to Steam Hardware Survey data, approximately 0.89% of their 130 million monthly active users own an NVIDIA RTX 4090. Factor in RTX 4080/4080 Super owners, and we're looking at roughly 3-4 million high-end GPUs in consumer hands for online PC gaming alone.

Each RTX 4090 packs 24GB of VRAM and delivers about 83 TFLOPS of FP16 compute, while RTX 4080 variants offer 16GB and around 65 TFLOPS (NVIDIA specs). Do the multiplication and you get some eye-popping numbers:

- Total potential memory: 60-80 petabytes

- Total potential compute: 200-300 exaFLOPS (FP16)
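Here is a minimal sketch of that multiplication. The user count, the 0.89% share, and the per-card specs are the figures quoted above. The split between 4090 and 4080-class cards inside the 3-4 million total is an assumption made for illustration, and `pool_totals` is a hypothetical helper name.

```python
# Back-of-the-napkin estimate of the consumer GPU pool.
# Assumption: every card in the pool that is not a 4090 is a 4080-class card.

STEAM_MAU = 130e6      # monthly active users, as quoted above
SHARE_4090 = 0.0089    # 0.89% of users own an RTX 4090, as quoted above

# (VRAM in GB, FP16 TFLOPS) per card, as quoted above
SPEC_4090 = (24, 83)
SPEC_4080 = (16, 65)


def pool_totals(total_gpus: float) -> tuple[float, float]:
    """Return (memory in PB, compute in exaFLOPS) for a pool of `total_gpus`."""
    n_4090 = STEAM_MAU * SHARE_4090
    n_4080 = total_gpus - n_4090
    mem_gb = n_4090 * SPEC_4090[0] + n_4080 * SPEC_4080[0]
    tflops = n_4090 * SPEC_4090[1] + n_4080 * SPEC_4080[1]
    # Decimal units: 1 PB = 1e6 GB, 1 exaFLOPS = 1e6 TFLOPS
    return mem_gb / 1e6, tflops / 1e6


for total in (3e6, 4e6):
    mem_pb, exaflops = pool_totals(total)
    print(f"{total / 1e6:.0f}M GPUs: {mem_pb:.0f} PB VRAM, {exaflops:.0f} exaFLOPS FP16")
```

Under these assumptions the pool comes out at roughly 57-73 PB and 216-281 exaFLOPS, which is in line with the rounded ranges in the list.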

For context, this computational capacity likely exceeds what any single cloud provider has dedicated to AI infrastructure. Google Cloud's TPU v4 pods each contain 4,096 chips with about 128TB of high-bandwidth memory. Even if Google operates 10-20 such pods, their total dedicated HBM capacity would be around 1.3-2.6 petabytes—dramatically less than our distributed consumer GPU pool.

That's a lot of computational power sitting idle most of the time.

But wait, there must be a catch

Not all AI workloads would be suitable for distributed execution. Training large foundation models requires specialized hardware and low-latency communication—that's still data center territory. But I'd argue that many commercially important workloads have potential:

- Inference tasks: Text generation, embeddings, classification

- Media generation: Think DALL-E, Stable Diffusion, audio synthesis

- Batch processing: Feature extraction, data transformation

- Parameter exploration: Distributed hyperparameter optimization

These workloads share a crucial characteristic: they can be broken into independent units with minimal inter-node communication. Recent research on task partitioning for distributed inference shows we can effectively deploy complex models across heterogeneous computing resources.
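To make "independent units" concrete, here is a small sketch of a batch job cut into work units that share no state. Everything in it is illustrative: `run_unit` is a stand-in for a node running a real model, and the threads only play the role of remote machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_units(items: list[str], unit_size: int) -> list[list[str]]:
    """Cut a batch into fixed-size work units that share no state."""
    return [items[i:i + unit_size] for i in range(0, len(items), unit_size)]


def run_unit(unit: list[str]) -> list[int]:
    """Stand-in for a node running inference on one unit.

    A real node would load a model and return embeddings or labels;
    here we return each text's length so the example runs anywhere.
    """
    return [len(text) for text in unit]


def run_batch(items: list[str], unit_size: int = 2, nodes: int = 3) -> list[int]:
    units = split_into_units(items, unit_size)
    # Each thread plays the role of a remote node; units never talk to each other.
    with ThreadPoolExecutor(max_workers=nodes) as pool:
        results = list(pool.map(run_unit, units))  # map preserves unit order
    # Merging is a simple concatenation because the units are independent.
    return [value for unit_result in results for value in unit_result]


print(run_batch(["a", "bb", "ccc", "dddd", "eeeee"]))
```

The only coordination is handing out units and concatenating results, which is why this class of workload tolerates slow home connections far better than training does.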

Building a Data Center Without Building a Data Center

The real genius of distributed computing isn't just the raw horsepower—it's the capital efficiency. Building a new hyperscale data center is a monstrous undertaking:

- Real estate: $10-20 million

- Construction: $200-1,000 per square foot

- Power infrastructure: $10-15 million for substations

- Hardware: $10,000-15,000 per GPU server node

- Personnel: $1-2 million annually

- Regulatory hurdles: Endless permitting, environmental studies...

All told, Synergy Research Group estimates a new hyperscale facility requires $1-3 billion upfront with 2-5 year construction timelines.

Distributed computing? It sidesteps virtually all of this. The hardware is already purchased. The power infrastructure exists. The real estate is spoken for. The cooling solutions are in place. You're essentially building a data center without building a data center.

Instead of massive capital expenditures, you're looking at software that can be developed as you go and revenue sharing with clients that opt into the network.

Traditional data centers, for all their technical marvels, represent remarkably inefficient allocation of capital:

- Lumpy investments: You can't buy 1/10th of a data center

- Geographic constraints: You're limited by power, cooling, and connectivity

- Utilization paradox: Resources are provisioned for peak demand, not average use

In contrast, distributed computing can introduce sophisticated market mechanisms. Using spot pricing models similar to those employed by cloud providers, a distributed platform could achieve far higher utilization while providing price signals that naturally incentivize participation during high-demand periods.

Think of it as the Uber model for compute resources—connecting idle capacity with urgent demand through price signals and technology.
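One way to picture the price signal is a rate that moves with the ratio of demand to supply. The rule below is a hypothetical sketch, not a description of how any cloud provider sets spot prices. The function name, the clamp values, and the base price are all assumptions.

```python
def spot_price(base_price: float, demand_gpu_hours: float, supply_gpu_hours: float,
               floor: float = 0.5, ceiling: float = 3.0) -> float:
    """Hourly price that scales with the demand/supply ratio.

    The multiplier is clamped to [floor, ceiling] so the price neither
    collapses when the network is idle nor spikes without bound.
    """
    if supply_gpu_hours <= 0:
        # No capacity online: quote the maximum to pull providers in.
        return base_price * ceiling
    ratio = demand_gpu_hours / supply_gpu_hours
    multiplier = min(max(ratio, floor), ceiling)
    return base_price * multiplier


# Balanced market, a demand spike, and a quiet period at an assumed $0.50 base rate.
for demand, supply in ((100, 100), (400, 100), (10, 100)):
    print(demand, supply, spot_price(0.50, demand, supply))
```

A higher quote during a spike is the incentive for more owners to bring their GPUs online, which in turn pulls the ratio back down.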

A distributed computing network inherits the remarkable resilience properties of other decentralized systems. Much like BitTorrent or cryptocurrency networks, a well-designed system becomes extraordinarily difficult to disrupt.

This resilience takes on new significance in our current environment of geopolitical tensions affecting semiconductor supply chains and increasing regulatory scrutiny of AI infrastructure. A distributed network crossing jurisdictional boundaries would be virtually impossible to shut down entirely—think of it as computational antifragility.

Is there enough margin?

For this to work, compensation needs to exceed opportunity costs for participants.

- Electricity: ~300-450W per high-end GPU, or $0.05-0.15 per hour

- Wear and tear: Some decrease in hardware lifespan

- Opportunity cost: Can't use your PC for other tasks during participation

Based on current cloud GPU pricing, similar computational resources cost $1.50-4.00 per hour on major providers. Even with significant discounting to attract participants (say $0.40-0.70 per hour), the earnings look attractive:

- 8 hours daily participation: $3.20-5.60 daily

- Monthly: $96-168

- Annual: $1,152-2,016
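The figures in this list are gross. A short sketch reproduces them and also nets out electricity, using the $0.05-0.15 per hour range from the cost list. It follows the same 30-day month and 12-month year used here; `earnings` is a hypothetical helper.

```python
def earnings(rate_per_hour: float, hours_per_day: float = 8,
             electricity_per_hour: float = 0.0) -> dict[str, float]:
    """Daily, monthly (30 days), and annual (12 months) earnings for one GPU."""
    daily = (rate_per_hour - electricity_per_hour) * hours_per_day
    monthly = daily * 30
    return {"daily": daily, "monthly": monthly, "annual": monthly * 12}


for rate in (0.40, 0.70):
    gross = earnings(rate)
    # Assumption: the net figure uses the worst-case electricity cost of $0.15/hour.
    net = earnings(rate, electricity_per_hour=0.15)
    print(f"${rate:.2f}/h: gross ${gross['monthly']:.0f}/mo, net ${net['monthly']:.0f}/mo")
```

Even at the high end of the electricity range, the monthly net stays at about $60-132 per GPU.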

For many PC enthusiasts, that's the difference between their expensive hardware being a pure expense and being a revenue-generating asset that pays for itself within 12-18 months. I have 4 kids with PCs who would all get excited about a new RTX 5090. That would be an $8,000 investment for me up front, but I'd be getting $480/mo in returns. If I buy new GPUs every 2 years, that works out to roughly a 20-30% annual return, depending on how you count electricity and resale value.
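The four-GPU example can be checked with a few lines. The $2,000 per card and $120 per month per GPU are simply the $8,000 and $480 figures divided by four. The return here is a simple, non-compounded gross figure that ignores electricity and resale value, which is an assumption about how to measure it.

```python
def payback_months(upfront: float, monthly_income: float) -> float:
    """Months of income needed to recover the upfront cost."""
    return upfront / monthly_income


def simple_annual_return(upfront: float, monthly_income: float, years: float) -> float:
    """Gross profit over the holding period, averaged per year, as a fraction of cost.

    Ignores electricity, resale value, and compounding.
    """
    total_income = monthly_income * 12 * years
    return (total_income - upfront) / upfront / years


upfront = 4 * 2000   # four GPUs at $2,000 each
monthly = 4 * 120    # $120/month per GPU, inside the $96-168 range
print(f"payback: {payback_months(upfront, monthly):.1f} months")
print(f"simple annual return: {simple_annual_return(upfront, monthly, 2):.0%}")
```

Under these assumptions the payback is a little under 17 months and the simple annualized figure comes out around 22%. Counting resale value would push it higher, and electricity would pull it lower.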

The Software Side: Making It All Work

The technical implementation would require several key components:

- Client software: Secure, lightweight application to manage resources and workloads

- Workload scheduler: Smart distribution system accounting for node capabilities

- Payment system: Accurate tracking and compensation

- Security sandbox: Protecting both provider and user systems
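As an illustration of the scheduler component, here is a minimal best-fit sketch that matches jobs to idle nodes by VRAM. The `Node` and `Job` types and the `assign` function are hypothetical. A real scheduler would also have to weigh bandwidth, reliability, and price.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    node_id: str
    vram_gb: int
    busy: bool = False


@dataclass
class Job:
    job_id: str
    vram_needed_gb: int


def assign(jobs: list[Job], nodes: list[Node]) -> dict[str, Optional[str]]:
    """Best-fit assignment: give each job the smallest idle node that can hold it.

    Larger jobs are placed first so small jobs do not occupy the big cards.
    Returns job_id -> node_id, or None when no idle node fits.
    """
    placement: dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.vram_needed_gb, reverse=True):
        candidates = [n for n in nodes if not n.busy and n.vram_gb >= job.vram_needed_gb]
        if not candidates:
            placement[job.job_id] = None
            continue
        chosen = min(candidates, key=lambda n: n.vram_gb)
        chosen.busy = True  # mark the node as taken for this scheduling round
        placement[job.job_id] = chosen.node_id
    return placement


nodes = [Node("rtx4090-a", 24), Node("rtx4080-b", 16)]
jobs = [Job("small", 8), Job("large", 20), Job("huge", 48)]
print(assign(jobs, nodes))
```

In this example the 20GB job lands on the 24GB card, the 8GB job on the 16GB card, and the 48GB job is left unplaced because no single consumer card in the pool can hold it.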

Recent advances in containerization, WebGPU, and zero-knowledge validation make this implementation far more feasible than previous distributed computing efforts. Projects like WebGPU and TensorFlow.js have already shown browser-based GPU computation is viable with reasonable security boundaries.

David vs. Goliath

Let's put this in perspective by comparing our distributed consumer GPU pool to major AI providers:

| Entity | Estimated AI Compute (exaFLOPS FP16) | Memory Capacity (PB) |
|---|---|---|
| Distributed Consumer GPUs (theoretical) | 200-300 | 60-80 |
| Google Cloud (estimated) | 30-50 | 1-3 |
| Microsoft Azure (estimated) | 20-40 | 1-2 |
| AWS (estimated) | 25-45 | 1-3 |
| xAI (reported) | ~10 | ~0.8 |

Even with modest participation—say 20% of consumer GPUs for 8 hours daily—the effective capacity would still be competitive with major cloud providers:

Effective exaFLOPS = 200 exaFLOPS × 0.2 (participation) × 0.33 (8hr/day) ≈ 13.2 exaFLOPS
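The same estimate as a small function, so the participation rate and daily hours can be varied. `effective_exaflops` is a hypothetical helper. It uses the exact 8/24 fraction rather than the rounded 0.33, so the low end prints slightly above 13.2.

```python
def effective_exaflops(peak_exaflops: float, participation: float,
                       hours_per_day: float) -> float:
    """Peak capacity scaled by the share of owners online and hours per day."""
    return peak_exaflops * participation * hours_per_day / 24


# Both ends of the 200-300 exaFLOPS theoretical range at 20% participation, 8 hours/day.
for peak in (200, 300):
    print(f"{peak} exaFLOPS peak -> {effective_exaflops(peak, 0.2, 8):.1f} effective")
```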

That's a significant new source of AI computing capacity in a market currently experiencing persistent shortages.

Why I would never bother trying to make this a reality at scale

I think there is a compelling case for exploring a way to tap into and monetize idle GPUs on consumer PCs and desktop workstations. I think the advantages over centralized data center infrastructure are there, particularly when it comes to resilience, geopolitical interference, supply disruption, and spot pricing. But I also thought this would be good for content distribution. It turned out that the ability to fine-tune technology centrally lets supply catch up with demand rather quickly, and the price approaches zero over time.

Google will continue to produce new hardware that uses 50% less power and has 10X more memory bandwidth. New models will quickly emerge that do run locally, and apps will run them inside their own software. I think this is what Apple is banking on.

There may always be a niche where the millions of idle GPUs are incredibly useful. But the time it would take to get even 1% of owners to install and run the software, and to manage their devices differently in order to collect the money, is probably longer than the time it takes for the next generation of cloud infrastructure and alternatives to emerge and make this less and less compelling.

Please, though, go start working on this idea and make it a reality. I have the GPUs to share. When the zombie apocalypse knocks out the data centers, I still want to be trading Ghibli memes, and I will need your software and GPU network if I want to do it from my phone.